Artificial intelligence (AI) is a vast and complex domain that is constantly evolving. Its impact ranges from simple algorithms to complex systems capable of simulating learning and decision-making. As this discipline progresses, new terms and concepts emerge that are crucial for a complete understanding. Among these is the “Perceptron,” a foundational concept in the field of machine learning, which has played a central role in the development of neural networks. This specialized article will delve into the theory and application of the perceptron, exploring its history, functionality, and the implications of its evolution in the field of AI.

Introducing the Perceptron: A Machine Learning Pioneer

The perceptron was developed in the 1950s by scientist Frank Rosenblatt. It is considered one of the earliest and simplest types of artificial neural networks. Inspired by the functioning of biological neurons, the perceptron was designed to perform binary classification tasks, essentially deciding whether an input falls into one category or another.

Perceptron Fundamentals

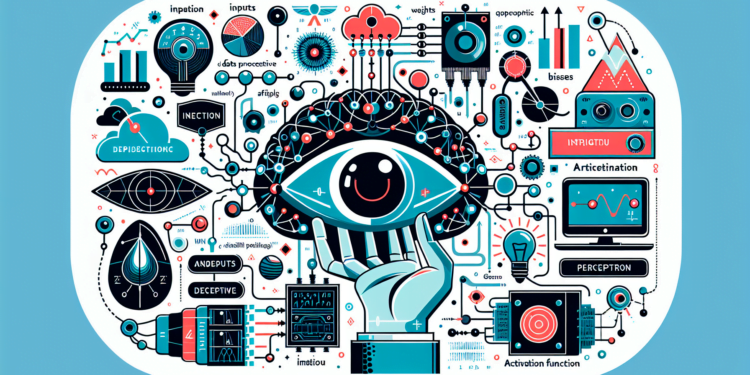

In its basic form, a perceptron consists of a set of input units connected by weighted links to a single output neuron (also called a node). Each link has an associated weight, and the neuron has a threshold, often expressed as a bias. The perceptron multiplies each input by its weight and sums the results. If the sum exceeds the threshold, the neuron activates and outputs 1; otherwise it outputs 0 (some formulations use -1 instead).
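The computation described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `perceptron_output` and the AND-gate weights are assumptions chosen for the example, and the threshold is written as a bias equal to the negative threshold.

```python
from typing import Sequence


def perceptron_output(inputs: Sequence[float], weights: Sequence[float], bias: float) -> int:
    """Return 1 if the weighted sum of the inputs plus the bias is positive, else 0."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return 1 if weighted_sum + bias > 0 else 0


# Hypothetical weights that make the unit behave like a logical AND gate.
and_weights = [1.0, 1.0]
and_bias = -1.5  # same as requiring the weighted sum to exceed a threshold of 1.5

for a in (0, 1):
    for b in (0, 1):
        print(a, b, perceptron_output([a, b], and_weights, and_bias))
```

Only the input (1, 1) pushes the weighted sum above the threshold, so only that input produces an output of 1.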

Perceptron Learning Algorithm

The strength and simplicity of the perceptron lie in its learning algorithm. For each training input, the algorithm compares the predicted class with the correct one; when they differ, it adjusts the weights and threshold in the direction that reduces the error. By repeating this process over the training set, the perceptron ‘learns’ to classify inputs correctly.
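The update rule can be sketched as follows. This is an illustrative version under stated assumptions: labels are 0 or 1, the weights start at zero, and the names `train_perceptron`, `predict`, `learning_rate`, and `max_epochs` are hypothetical choices for this example rather than anything fixed by the original formulation.

```python
from typing import List, Sequence, Tuple


def predict(x: Sequence[float], weights: Sequence[float], bias: float) -> int:
    """Threshold the weighted sum: 1 if positive, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train_perceptron(
    samples: Sequence[Sequence[float]],
    labels: Sequence[int],
    learning_rate: float = 1.0,
    max_epochs: int = 100,
) -> Tuple[List[float], float]:
    """Adjust weights and bias after every misclassified sample."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, target in zip(samples, labels):
            error = target - predict(x, weights, bias)  # -1, 0, or +1
            if error != 0:
                # Move the decision boundary toward classifying x correctly.
                weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
                bias += learning_rate * error
                mistakes += 1
        if mistakes == 0:  # a full pass with no errors: training is done
            break
    return weights, bias


# Logical OR is linearly separable, so training reaches an error-free pass.
or_inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
or_labels = [0, 1, 1, 1]
or_weights, or_bias = train_perceptron(or_inputs, or_labels)
print([predict(x, or_weights, or_bias) for x in or_inputs])
```

The weights change only when a sample is misclassified, which is why the loop can stop as soon as one full pass produces no mistakes.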

Perceptron Convergence Theorem

The convergence theorem states that if the training data are linearly separable, the perceptron’s algorithm will, after a finite number of updates, arrive at weights and a bias that define a hyperplane correctly separating the classes. This guarantee underpinned the perceptron’s early success on problems where a linear boundary is sufficient.
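The guarantee is often stated as a bound on the number of updates. The notation here is an assumption introduced for illustration: labels are $y_i \in \{-1, +1\}$, the bias is absorbed into the weight vector by appending a constant 1 to each input $x_i$, $R$ bounds the input norms, and $\gamma$ is the margin achieved by some unit-length separating vector $w^*$.

$$
\|x_i\| \le R \quad\text{and}\quad y_i \,(w^* \cdot x_i) \ge \gamma > 0 \ \text{ for all } i
\;\;\Longrightarrow\;\;
\text{number of updates} \le \left(\frac{R}{\gamma}\right)^{2}
$$

In this form, the wider the margin between the two classes relative to the scale of the inputs, the fewer corrections the algorithm needs before it stops making errors.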

Limitations and Advances

However, the perceptron is limited in capability. A single-layer perceptron can only draw a linear decision boundary, so it cannot solve problems that are not linearly separable, such as the well-known XOR problem. This limitation, highlighted in the 1969 book “Perceptrons” by Marvin Minsky and Seymour Papert, contributed to a decline in interest in neural networks during the 1970s.
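The XOR limitation can be observed directly. The sketch below is illustrative only: it applies the standard perceptron update with a learning rate of 1 and 0/1 labels (assumptions for this example), and records the best accuracy reached over many passes. Because no straight line separates the XOR classes, that accuracy never reaches four out of four.

```python
xor_inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
xor_labels = [0, 1, 1, 0]


def predict(x, weights, bias):
    """Threshold the weighted sum: 1 if positive, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def best_accuracy_on(samples, labels, epochs):
    """Train a single perceptron and return the best per-epoch correct count."""
    weights, bias = [0.0, 0.0], 0.0
    best_correct = 0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            error = target - predict(x, weights, bias)
            weights = [w + error * xi for w, xi in zip(weights, x)]
            bias += error
        correct = sum(predict(x, weights, bias) == t for x, t in zip(samples, labels))
        best_correct = max(best_correct, correct)
    return best_correct


best = best_accuracy_on(xor_inputs, xor_labels, epochs=1000)
print(f"best epoch classified {best} of 4 XOR points correctly")
```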

Despite such limitations, the perceptron forms the foundation upon which more complex neural networks are built. The introduction of multi-layer structures and more advanced algorithms, like backpropagation, has allowed AI to overcome the linear barriers and handle tasks of substantial complexity.
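To illustrate why a second layer helps, here is a minimal sketch of a two-layer network of threshold units that computes XOR. The weights are set by hand for clarity and are an assumption of this example; in practice they would be learned, for instance with backpropagation on differentiable activations. The names `step`, `unit`, and `xor_network` are hypothetical.

```python
def step(z: float) -> int:
    """Threshold activation: 1 if z is positive, else 0."""
    return 1 if z > 0 else 0


def unit(inputs, weights, bias) -> int:
    """A single perceptron-style unit."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def xor_network(a: int, b: int) -> int:
    # Hidden layer: two units, each drawing its own linear boundary.
    h_or = unit([a, b], [1.0, 1.0], -0.5)      # fires if a OR b
    h_nand = unit([a, b], [-1.0, -1.0], 1.5)   # fires unless a AND b
    # Output layer: fires only when both hidden units fire.
    return unit([h_or, h_nand], [1.0, 1.0], -1.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```

Each hidden unit is still a linear classifier; it is the combination of their outputs in the second layer that produces a non-linear decision region.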

Perceptron Applications

The perceptron has been used in a variety of practical applications, including image processing, spam detection in emails, and speech recognition. It has also been an instructive model for understanding how complex systems can be constructed from simple processing units.

Impact on Current Research

Today, while neural network models have evolved far beyond the simple perceptron, its influence remains profound. Many current techniques, such as deep learning, are extensions of the fundamental principles established by the perceptron.

Advanced Technical Considerations

Research continues to build on the perceptron, drawing on findings in optimization and learning theory and on related methods such as support vector machines (SVMs) and deep learning. Studies at the intersection of the perceptron and other fields, such as neuroscience, offer useful insight by drawing analogies between artificial and biological networks.

Future Directions and Challenges

While the individual perceptron has been widely surpassed in terms of complexity and capability, its underlying principles remain relevant. Future research may reveal ways to further optimize its architecture and algorithms for new applications, and how the principles of the perceptron could inform the creation of more efficient and powerful machine learning systems. Current trends indicate a growing interest in how understanding the perceptron can contribute to the developing field of Explainable AI (XAI), which aims to make AI more comprehensible to humans.

Conclusion: The Perceptron in the Era of Advanced AI

The perceptron, despite being a simple model, is an indispensable link in the history of AI. Its legacy is fundamental to progressing towards increasingly advanced AI systems. By studying its origin, functionality, and evolution, professionals and scholars can better appreciate the foundations upon which current technology is built while preparing for tomorrow’s innovations.

The purpose of this article has been to examine the technical aspects of the perceptron and the advances that followed it, highlighting its ongoing relevance in the shifting landscape of artificial intelligence.