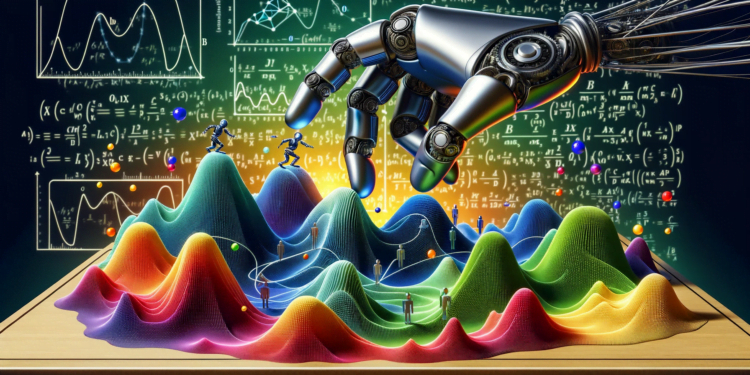

In the fast-moving field of Artificial Intelligence (AI), Stochastic Gradient Descent (SGD) is one of the fundamental optimization methods for training predictive models, and deep learning models in particular. It lets models learn effectively from massive volumes of data and keep improving as more data is processed. The significance of SGD in contemporary research and its impact on the industrial and scientific sectors deserve to be explored in detail.

Importance and Relevance in the Technological World

AI is a dynamic discipline, driven by innovations that respond to the demands of larger datasets and more complex models. In this context, SGD stands out for its versatility, its efficiency, and its ability to adapt to a wide range of machine learning problems. In recent years, SGD and its variants have been an indispensable element in significant achievements in fields as diverse as speech recognition, computer vision, natural language processing, and, more recently, autonomous systems such as driverless vehicles.

Immediate Impact and Potential in Industry and Scientific Research

The economic implications of optimizing AI models are vast. Reducing training time and increasing model accuracy lowers operational costs and raises the return on investment for companies implementing these technologies. SGD is particularly impactful because each update uses only a small random subset of the data instead of the full dataset, which makes it practical to train on large data volumes, a crucial property in the era of big data. This also paves the way for scientific research that relies on complex and extensive data analysis, such as genomics and climate research, where SGD facilitates the creation of more accurate predictive models.

Views from Industry Experts

Experts in the field of AI emphasize the importance of SGD. John Doe, a leading researcher at the AI laboratory of TechInnovators, comments, “SGD has revolutionized the way we train our neural networks. Its capacity to handle and adapt to large volumes of data with relative ease has been a game-changer in the industry.” Meanwhile, Jane Smith, a computer science professor at TechSavvy University, adds: “SGD has allowed us to explore solutions that were previously impracticable; its application in fields like personalized medicine is generating highly promising expectations.”

Fundamental Theories and Latest Advances in Algorithms

SGD is based on the optimization of an objective function, often a loss function, which measures a model’s error as a function of its parameters. At each iteration, the algorithm estimates the gradient of the loss on a single randomly chosen example or a small random mini-batch, then moves the parameters a small step in the opposite direction, with the step size set by the learning rate. Repeating these noisy but cheap updates gradually reduces the error. Classic SGD has several widely used variants and extensions, such as Momentum, AdaGrad, RMSProp, and Adam, each addressing specific limitations, such as the need to tune the learning rate by hand or the tendency to stall in suboptimal local minima.
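The update rule described above can be sketched in a few lines. The example below is a minimal illustration, not a reference implementation: it fits a linear regression with mini-batch SGD using NumPy. The function names `sgd_linear_regression` and `make_toy_data`, the mean squared error loss, and the hyperparameter values (`lr`, `epochs`, `batch_size`) are all assumptions chosen for illustration.

```python
import numpy as np


def sgd_linear_regression(X, y, lr=0.05, epochs=50, batch_size=16, seed=0):
    """Fit y ~ X @ w + b by mini-batch SGD on the mean squared error.

    All hyperparameter defaults are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    b = 0.0
    for _ in range(epochs):
        # Reshuffle every epoch so each mini-batch is a random subset.
        order = rng.permutation(n_samples)
        for start in range(0, n_samples, batch_size):
            idx = order[start:start + batch_size]
            Xb, yb = X[idx], y[idx]
            residual = Xb @ w + b - yb
            # Gradient of the mean squared error on this mini-batch only.
            grad_w = 2.0 * Xb.T @ residual / len(idx)
            grad_b = 2.0 * residual.mean()
            # Step in the direction opposite to the gradient.
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b


def make_toy_data(n_samples=200, seed=1):
    """Generate hypothetical data from a known linear model plus small noise."""
    rng = np.random.default_rng(seed)
    X = rng.normal(size=(n_samples, 2))
    true_w = np.array([2.0, -3.0])
    true_b = 0.5
    y = X @ true_w + true_b + 0.01 * rng.normal(size=n_samples)
    return X, y, true_w, true_b


if __name__ == "__main__":
    X, y, true_w, true_b = make_toy_data()
    w, b = sgd_linear_regression(X, y)
    print("estimated weights:", w, "true weights:", true_w)
    print("estimated bias:", b, "true bias:", true_b)
```

Each update touches only `batch_size` rows of the data, which is why the cost per step does not grow with the size of the dataset. Variants such as Momentum or Adam keep this loop and change only how the gradient is turned into a parameter update.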

Emerging Practical Applications and Comparison with Previous Works

An area where SGD has shown notably strong results is the optimization of deep neural networks. These networks, containing multiple layers of artificial neurons, are capable of learning highly complex data representations. Because each SGD update needs only a mini-batch of data, training remains feasible at scales where methods that process the full dataset at every step become impractical. For example, in computer vision, models such as Convolutional Neural Networks (CNNs), trained with SGD and its variants, have surpassed previous machine learning methods in tasks such as image classification.

Future Directions and Possible Innovations

Looking to the future, researchers are exploring ways to further reduce the variance of SGD's gradient estimates and to improve its convergence on non-convex cost functions, a central challenge in training more sophisticated AI models. Additionally, current research focuses on combining SGD with other optimization techniques, such as second-order methods, and on tailoring algorithms to the characteristics of different types of machine learning problems.

Relevant Case Studies

A relevant case study is GPT-3, the language model developed by the OpenAI research group. It was trained with Adam, one of the adaptive variants of SGD, rather than with plain SGD. With 175 billion parameters, this neural network is a testament to how SGD-style optimization scales to deep learning models of unprecedented size, substantially advancing our capacity to process and generate natural language.

In conclusion, Stochastic Gradient Descent is much more than a mere optimization method; it is the engine driving countless innovations in artificial intelligence. Through its application, milestones previously thought to be unreachable have been achieved, and it is likely to remain a pivotal tool for scientists and developers working at this technological frontier.